There are two different operations: training and identification. The latter is often called "OCR".

The world isn't perfect and neither are recognizers. Ideally, you want to train this recognizer with perfectly labeled characters. Suppose the input is not ideal.

How do we handle errors in labeling? For each class, form an average template by aligning the centroids, counting the ON pixels at each location and thresholding to N/2, where N is the number of instances in the class. Then compare each instance with the average (using correlation) and reject any with a sufficiently low score, which might be a score below 0.8. The average templates can be recomputed again if any instances have been removed.

Suppose for some reason that there are no labeled characters for training. If you have already built a recognizer for the same restricted character set that has similar letterforms, that recognizer can be used to bootstrap a recognizer for the images of interest. The unlabeled input are correlated with the existing recognizer, and if an instance has a sufficiently high score with one of the templates in the existing recognizer, it is given that label and added to the instances of the new recognizer.

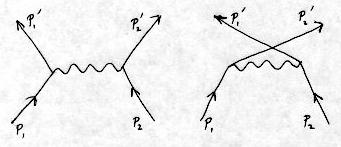

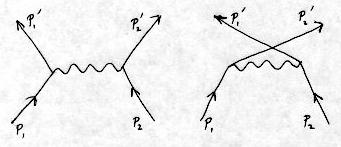

There are two basic choices in the use of a recognizer:

When identifying a character, the centroids are aligned and the correlation is found. Because of the discretization of the lattice, a higher score is often found when the centroids are misaligned by +- 1 pixel either horizontally or vertically. Consequently, to find the maximum score it is necessary to do the correlation at nine locations.

Individual characters are input for training, but when the recognizer is used for identification, you often have several touching characters. To split them, one can apply various heuristics on properties of the binary image, such as the vertical projection profile. However, such rules are highly error-prone, and both the rules and the results depend strongly on the character set and the weight of the strokes in each character.

If we have accurate templates of the individual characters and know with good accuracy the sum of the width of the printed character and the expected space between that character and the following one (this sum is called set-width), then one can apply a MAP (maximum a posteriori) approach to find the most likely set of characters that would generate the resulting image. This also requires an estimate of the "channel" bit-flip probabilities, but just about any estimate will suffice. This communication theory approach to OCR is called Document Image Decoding, and was pioneered by Gary Kopec and Phil Chou in the early 1990s. For simple grammars such as ordinary text, this can be implemented by dynamic programming, where the log likelihood for each template to be located at each pixel position is used.

Our interest here is not to do the actual decoding (aka OCR) on the connected component, but just to do the segmentation. For some character sets that have similar character widths, a greedy extraction method works reasonably well. Find the score for each template in each pixel position across the connected component, and select the template and position for which the score is maximized. Excise the rectangle bounding the template (and save it). This typically leaves two rectangles, on on the left and one on the right. Apply a filter such that if a rectangle is too narrow to be one of the characters in the character set, it is discarded. Apply the same operation to any pieces that are not filtered. At the end, we have a set of rectangles that cover the initial component, and the segmentation is finished. The image pieces in these rectangles are then sent to the recognizer.

How do we decide on the vertical position of each template as it marches across the component? For generating the average templates and for identification, we align the centroids. So we compute, for each template (width), we apply a sliding window and within the window at each horizontal location, find the vertical location the centroid. As with averaging and identification, we compute at all locations within +- 1 pixel; this results in three correlations at each horizontal pixel position.