Some introductory material on elementary particles

How did we get here?

What is a fundamental particle?

Quark and lepton interactions: the weak and electromagnetic forces

QED in a little more detail

A more modern view of the weak interaction

Quark interactions: the color force

So where does the old "nuclear" force come from?

Symmetry and angular momentum: particles are classified as either fermions or bosons

Internal symmetry and interactions

Tables of leptons and quarks

The four spectroscopies

For further reading

There are many references for information and answers to questions about physics. I list some useful books at the end of this primer. Additionally, you may be amused and/or enlightened by some of the following:

In 1869, Mendeleev assembled the known elements into the Periodic Table, grouping them according to their chemical similarities and in order of ascending atomic weight. The table is of great use in predicting the chemical properties of atoms. However, the meaning of the ordering was not well understood until 1913. In that year Moseley realized that the atoms were ordered by the number of electrons (equivalently, the charge of the nucleus) rather than by the weight of the nucleus. You might find it strange that it took 44 years to finally understand the table, but consider: the electron was discovered in 1897, and the nucleus of the atom was discovered by Rutherford in 1911. And in 1913 Bohr published his simple theory of the hydrogen atom, which explained much of the absorption and emission spectrum of hydrogen. His model confined the electrons to discrete orbits, with angular momentum in discrete units given by Planck's constant divided by 2 pi. (Planck's constant is the mysterious fundamental unit of the quantum world. It is a tiny number, 6.63 x 10^-27 erg-seconds, which assumed its proper place in the laws of nature only after the quantum mechanics revolution of the mid-1920s. Bohr cleverly assumed that the angular momentum of the orbiting electrons came in units of 1.05 x 10^-27 erg-seconds, which is Planck's constant divided by 2 pi.) In 1913, nobody knew about the neutron. It was assumed that the nucleus was composed of a large number of positively charged protons and a smaller number of negatively charged electrons. The total number of electrons, both within and outside the nucleus, was taken to equal the number of protons, making the atom electrically neutral.
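Bohr's model gives the hydrogen energy levels as E_n = -13.6 eV / n^2. The Python sketch below turns those levels into the wavelengths of the visible Balmer lines, which are the transitions that end on n = 2. It ignores the small correction for the finite mass of the nucleus and quotes vacuum wavelengths. The constants are standard rounded values, and the function names are illustrative.

```python
RYDBERG_EV = 13.6057   # hydrogen binding energy in the Bohr model (infinite nuclear mass), eV
HC_EV_NM = 1239.84     # Planck's constant times the speed of light, eV*nm

def bohr_energy_ev(n):
    """Energy of the n-th Bohr orbit of hydrogen, in eV (negative means bound)."""
    return -RYDBERG_EV / n**2

def emission_wavelength_nm(n_upper, n_lower):
    """Wavelength of the photon emitted when the electron drops from n_upper to n_lower."""
    photon_energy = bohr_energy_ev(n_upper) - bohr_energy_ev(n_lower)
    return HC_EV_NM / photon_energy

# Balmer series: the visible hydrogen lines all end on n = 2.
for n in range(3, 7):
    print(f"{n} -> 2: {emission_wavelength_nm(n, 2):.1f} nm")
```

The first line, at about 656 nm, is the familiar red line of hydrogen.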

It wasn't until the 1920s, with the advent of quantum mechanics, that the periodic table was really understood. Quantum mechanics describes with great accuracy how electrons behave in an atom. One of its first successes was the enumeration of the energy levels that the electron can occupy in the simplest of all atoms: hydrogen. The properties of the wave functions for a single electron (specifically, its angular momentum and spin quantum numbers) immediately gave the magic numbers that had been observed in the periodic table: 2, 8, 18, ... These are the increments in the atomic number (number of electrons) before the chemical properties tend to repeat. The explanation was simple: the electrons in atoms heavier than hydrogen successively fill the orbits that are allowed in the hydrogen atom. Of course, the electrons also interact with one another, which makes the details of chemistry and atomic physics more complicated than this simple picture. But the fundamental fact is that the quantum mechanics of a single electron in the hydrogen atom gives a good first approximation to the chemical properties of the elements!
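The magic numbers come from simple counting. For principal quantum number n, the orbital angular momentum l runs from 0 to n-1. Each l has 2l+1 orientations, and each orbital holds two spin states. The Python sketch below does this count for hydrogen; the helper name is illustrative. In real atoms the period lengths are 2, 8, 8, 18, 18, 32, 32, so some of these numbers appear twice. That repetition comes from the electron-electron interactions mentioned above.

```python
def shell_capacity(n):
    """Number of hydrogen-atom electron states with principal quantum number n."""
    # l = 0 .. n-1; each l has 2l+1 values of m; each (n, l, m) orbital holds 2 spin states.
    return sum(2 * (2 * l + 1) for l in range(n))

print([shell_capacity(n) for n in range(1, 5)])  # [2, 8, 18, 32]
```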

The neutron was finally discovered, in 1932, by Chadwick. This discovery cleared up some serious puzzles. One such puzzle involved the nucleus of the nitrogen atom. Protons and electrons were known to have "spin 1/2." This means that they act as if they are little spinning tops with angular momentum equal to half of Planck's constant (divided by 2 pi). The nitrogen nucleus acted as if it had an even number of spin 1/2 particles in it. The pre-1932 theory, however, required 21 of these particles inside: 14 protons and 7 electrons, giving a +7 total charge. That charge was balanced by 7 negatively charged electrons outside the nucleus, which are responsible for all the chemistry of nitrogen. Finally, with Chadwick's discovery, it was understood that there were no electrons in the nucleus: nitrogen has 7 protons and 7 neutrons, for a total of 14, which is an even number, as had been observed. Another puzzle that was resolved by the neutron involved the assumed confinement of electrons within the nucleus. This was problematic because of Heisenberg's uncertainty principle, formulated in 1927. We will get to that later.

In that same year, 1932, the positron, which is the antimatter version of the electron, was discovered by Carl Anderson. The positron dramatically confirmed Dirac's relativistic quantum theory of the electron, which he had developed starting in 1928. That theory predicted the existence of the positron, as well as the fact that both the electron and positron should have spin 1/2. Thus by 1932, there were five particles, all supposedly elementary, that were known:

We'll talk about the meaning of the spin below. For now, it can be understood as an intrinsic, non-classical (i.e., purely quantum mechanical) property of particles. It gives them an internal angular momentum in integer (including zero) or half-integer multiples of Planck's constant (divided by 2 pi).

Further advances were made in the 1930s. Some nuclei are unstable, and they were found to decay in one of three ways:

In weak interactions, it was found that the energy of the emitted electron or positron was not constant. There was a distribution of energies, with nonzero probability for all energies between a lower bound and an upper bound. Now, a basic postulate of quantum mechanics is that each state of the nucleus, before and after decay, has a definite energy and is labelled uniquely by a set of quantum numbers. Conservation of energy demands that the energy of the emitted particle(s) equal the difference between the nuclear energies before and after the decay. So suppose the electron can leave a nucleus in a known quantum state with a variable amount of energy. Then there are only two possibilities: either (1) there is another, unobserved particle that carries away some energy, or (2) energy is not conserved in the weak interactions. Some distinguished physicists wasted time on the second choice. Wolfgang Pauli made the first and more likely choice. It was more likely because conservation of energy is related to a fundamental assumed symmetry of nature: that the laws of physics do not change over time! Enrico Fermi then named the unobserved particle the neutrino, Italian for "little neutral one," and postulated a universal weak interaction that was responsible for all weak decays. These "weak decays" also tend to be very "slow." By this we mean it can take a relatively long time (seconds, days, years, or millions of years) before the nucleus emits the particle. This is in contrast to the electromagnetic decays, where a photon is typically emitted in a tiny fraction of a billionth of a second. Processes driven directly by the strong interaction are faster still, which is one reason it was called "strong." Alpha decay, in which a helium nucleus is thrown out, is a notable exception. The alpha particle must tunnel through the electrical (Coulomb) barrier of the nucleus, and this can stretch the half-life to billions of years.
Although these decay times are typically ordered as "strong" << "electromagnetic" << "weak", there are many complicating factors involved. These include the various quantum states of the nuclei, "selection rules" that determine which transitions are "allowed" between the states, etc.
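The continuous spectrum can be sketched numerically. The Python below uses the textbook "allowed" spectrum shape, dN/dT proportional to p E (Q - T)^2. Here T is the electron's kinetic energy, E its total energy, p its momentum, and Q the total energy released. The sketch assumes a massless neutrino and neglects nuclear recoil and the Coulomb correction (the Fermi function), so it is qualitative only. The endpoint Q = 18.6 keV for tritium is a standard approximate value. Whatever energy the electron does not take, the neutrino carries off.

```python
import math

ELECTRON_MASS_MEV = 0.511

def allowed_beta_spectrum(t, q):
    """Unnormalized electron kinetic-energy spectrum dN/dT ~ p * E * (Q - T)^2.

    Approximation: allowed transition, massless neutrino; nuclear recoil and the
    Coulomb (Fermi function) correction are neglected.
    """
    if not 0.0 <= t <= q:
        return 0.0
    e = t + ELECTRON_MASS_MEV                                        # total electron energy, MeV
    p = math.sqrt(max(0.0, e * e - ELECTRON_MASS_MEV * ELECTRON_MASS_MEV))  # momentum, MeV/c
    return p * e * (q - t) ** 2

Q_TRITIUM = 0.0186  # approximate tritium endpoint energy, MeV

# Midpoint-rule average of the kinetic energy over the whole spectrum.
steps = 1000
ts = [Q_TRITIUM * (i + 0.5) / steps for i in range(steps)]
weights = [allowed_beta_spectrum(t, Q_TRITIUM) for t in ts]
mean_t = sum(t * w for t, w in zip(ts, weights)) / sum(weights)
print(f"mean electron kinetic energy: {mean_t * 1000:.1f} keV of {Q_TRITIUM * 1000:.1f} keV")
print(f"mean energy carried off by the neutrino: {(Q_TRITIUM - mean_t) * 1000:.1f} keV")
```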

A typical heavy nucleus such as uranium will undergo a cascade of decays of different types. It sheds protons and neutrons with alpha decay, sheds energy only with gamma decay, and changes neutrons into protons (or vice versa) with beta decay. Uranium-238 ends up as lead-206. Along the way it converts about 0.02 percent of its original mass into energy: roughly 52 million electron volts (MeV), or about 1/18 the mass of a proton. The overall decay half-life of uranium-238 is about 4.5 billion years. Essentially all of that half-life is spent waiting for the first step, an alpha decay slowed by the Coulomb barrier. Much of the phenomenology of nuclear physics is contained in a graph called the curve of binding energy. The binding energy per nucleon is a maximum for the most stable nuclei, those of iron (and its neighbor nickel). Elements heavier than iron release energy if they break up into lighter nuclei closer to iron. Elements lighter than iron release energy if they "fuse" into heavier ones. For this reason, iron is the "end point" in the cycles of nuclear burning in stars. As might be expected, most of the stellar energy is liberated in the fusion of four hydrogen nuclei to form helium. Relatively little additional energy is given off as the helium nuclei combine to form carbon, oxygen and, eventually, iron.
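The energy bookkeeping for the whole uranium chain can be checked with mass-energy equivalence. The Python sketch below uses standard tabulated atomic masses, rounded. It assumes the usual U-238 chain of 8 alpha decays and 6 beta decays ending at lead-206. The result is about 52 MeV, roughly 0.02 percent of the uranium mass. Some of that energy escapes with the antineutrinos from the six beta decays.

```python
# Atomic masses in unified atomic mass units (standard tabulated values, rounded).
M_U238 = 238.0507884
M_PB206 = 205.9744653
M_HE4 = 4.00260325
MEV_PER_U = 931.494          # energy equivalent of 1 u, MeV
PROTON_MASS_MEV = 938.272

# U-238 -> Pb-206 + 8 alpha particles (+ 6 beta decays). With neutral-atom masses the
# electrons emitted in beta decay are already accounted for, so only 8 helium atoms
# are subtracted.
mass_defect_u = M_U238 - M_PB206 - 8 * M_HE4
energy_mev = mass_defect_u * MEV_PER_U
fraction = mass_defect_u / M_U238

print(f"energy released: {energy_mev:.1f} MeV")
print(f"fraction of the original mass: {100 * fraction:.3f} percent")
print(f"in proton masses: {energy_mev / PROTON_MASS_MEV:.3f}")
```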

The strong force was particularly puzzling. The electromagnetic interaction was fairly well understood quantum mechanically in the 1930s. By analogy with it, Yukawa guessed that the strong force was due to the exchange of a medium-weight particle, which he named a meson, between two nucleons. (The proton and neutron were called "nucleons".) This idea of exchanging unseen particles comes naturally from a description of physical laws that incorporates quantum mechanics and relativity. It is called quantum field theory because it permits particles to interact via a "field" (see below) of "quantized particles." It requires the generation and destruction of these quanta, according to rules that depend on the specific interaction. From his theory and from measurements of the range of the strong force, Yukawa predicted that his meson would have a mass of about 200 times the electron mass, about 1/10 the mass of the proton. Soon thereafter, in 1936, a cosmic ray particle was discovered with this approximate mass. (Cosmic rays are charged particles, mostly protons from outside the solar system, which enter the Earth's atmosphere. The cosmic rays that are observed at ground level are primarily particles that are created in the upper atmosphere and travel down from there.) This was initially taken to be a verification of Yukawa's theory. It was quickly discovered, however, that the particle, now called a muon, is really a heavy cousin of the electron and has nothing to do with the strong force. Its detection at the Earth's surface was nonetheless a striking confirmation of Einstein's special theory of relativity. The muon is short-lived, and it could travel several miles through the atmosphere only because its internal "clock" was running very slowly as seen from the Earth. That clock is a hypothetical one zipping along with the muon, and it slows because the muon travels at nearly the speed of light.
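The muon argument is easy to put into numbers. The Python sketch below makes two assumptions, purely for illustration: the muons are created about 10 km up, and they travel at 0.995 times the speed of light, a time-dilation factor of about 10. It uses the muon's mean lifetime of about 2.2 microseconds. Without time dilation essentially none would reach the ground; with it, roughly a fifth do.

```python
import math

C = 299_792_458.0            # speed of light, m/s
MUON_LIFETIME_S = 2.197e-6   # mean muon lifetime in its own rest frame, s

def survival_fraction(path_m, beta, dilate=True):
    """Fraction of muons that survive a path of path_m metres at speed beta*c."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta) if dilate else 1.0
    mean_path = gamma * beta * C * MUON_LIFETIME_S   # mean decay length seen from the ground
    return math.exp(-path_m / mean_path)

PATH_M, BETA = 10_000.0, 0.995   # illustrative: produced ~10 km up, gamma ~ 10
print(f"with time dilation:    {survival_fraction(PATH_M, BETA):.3f}")
print(f"without time dilation: {survival_fraction(PATH_M, BETA, dilate=False):.1e}")
```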
In 1947, another particle, the pion or "pi meson," was observed in cosmic rays, and soon afterward it was produced in experiments with cyclotron accelerators. It had the predicted mass and interacted with protons and neutrons by the strong interaction. This was the particle that Yukawa had predicted to mediate the strong interaction. For more than two decades, until about 1970, the pion was considered to be one of the fundamental particles responsible for the strong force. The negatively charged pion decays "slowly," in about 26 billionths of a second, via the weak interaction, mostly into a muon and an antineutrino. That makes it a little disconcerting to consider it truly elementary. In that period, though, physicists were willing to make a liberal interpretation of what constituted an elementary particle. (Roughly speaking, a particle that lived long enough to decay by the weak interaction was elementary; one that decayed by the strong interaction was called a resonance. Resonances were created in particle accelerators. A resonance showed up as an increased probability for scattering when the incoming particles had enough energy to create the "resonance" particle. In fact, the probability of making a resonance particle was enhanced over a spread of energies. The particle's lifetime was so short that it could only be inferred from the width of that energy distribution, via the Heisenberg Uncertainty Principle. The shorter the lifetime, the greater the variation in the energy of the resonance: also sprach Heisenberg.)
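The width-lifetime relation is the energy-time form of the uncertainty principle: tau = hbar / Gamma, where Gamma is the width of the energy distribution. The Python sketch below applies it to two well-known resonances, the Delta(1232) baryon and the rho(770) meson. It uses rounded widths of about 117 MeV and 149 MeV, taken as illustrative inputs. Both lifetimes come out near 5 x 10^-24 seconds, far below a billionth of a trillionth of a second. In that time light covers only about the diameter of a proton.

```python
HBAR_MEV_S = 6.582119569e-22   # reduced Planck constant (h / 2 pi), MeV*s
C_M_PER_S = 299_792_458.0      # speed of light, m/s

def lifetime_from_width(width_mev):
    """Mean lifetime tau = hbar / Gamma from a resonance's energy width Gamma (MeV)."""
    return HBAR_MEV_S / width_mev

# Approximate widths of two well-known resonances (illustrative, rounded values).
for name, width in [("Delta(1232)", 117.0), ("rho(770)", 149.0)]:
    tau = lifetime_from_width(width)
    print(f"{name}: tau ~ {tau:.1e} s; light travels {tau * C_M_PER_S:.1e} m in that time")
```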

But as we now understand it, the pion is not fundamental, and the strong force of nuclear physics is not the real strong force of the universe.

We now abandon a chronological overview, and zip forward to 1970, picking up some of the discoveries between 1932 and 1970 retrospectively.

The most recent watershed in the understanding of the basic constituents of the universe occurred in the years surrounding 1970. Before 1970, the smallest particles of matter known had been assembled into two families of leptons (the electron and its neutrino; the muon and its neutrino) and two very different types of relatively heavy particles, mesons and baryons, collectively known as hadrons. As it turned out, none of the hadrons is elementary -- they are all made out of particles with fractional charges named quarks by Murray Gell-Mann in 1964. The mesons are made from quark/anti-quark pairs, and the baryons, of which the lightest are the proton and neutron, are composed of 3 quarks. Initially, nobody, including Gell-Mann, believed in the actual existence of quarks. They were a useful construct that explained the properties of several hundred mesons and baryons that had been observed. As mentioned above, many of these hadronic "particles" were called resonances because they existed for a very short period of time, less than a billionth of a trillionth of a second, about the time it takes light to cross the nucleus of an atom! However, in the decade between 1964 and 1974, the evidence built up for the existence of quarks, and since the mid-70s, it has been generally accepted that the best model we have for the fundamental building blocks of matter is that everything is composed of leptons and quarks, plus the particles that provide the interactions between them.

What distinguishes a fundamental particle from one that is not? The leptons and quarks are fundamental because they are believed to be point particles that are not composites of other more fundamental particles. Of course, the history of physics is replete with claims, eventually shown to be false, that the most basic, fundamental and indivisible particles had been found. Like the mesons and baryons, leptons and quarks may eventually be shown to be composites of other particles. Still, the description we now have appears to be valid up to energies several thousand times the proton's rest energy.

Leptons and quarks have a lot in common. They are both assumed to obey the same equation of motion (the Dirac equation), and they both have an intrinsic angular momentum ("spin") equal to 1/2 the fundamental quantum unit of spin. They are called fermions and obey the Pauli Exclusion Principle, which keeps the electrons in an atom (and hence the atom itself) from collapsing into a very low energy state. There are three known families for both leptons and quarks. The first family of leptons contains the electron, its neutrino (a chargeless and nearly massless version of the electron), and their antiparticles, the positron and the antineutrino. There are also two quarks in the first family, designated "up" and "down". But the quarks have properties so strange that it took nearly 10 years from the time they were proposed in 1964 until they were widely accepted as existing. First, they have fractional charges of -1/3 and +2/3, in units of the proton's charge (the magnitude of the electron's charge). Nobody has ever seen a free particle with fractional charge. Thus, the quarks must be permanently trapped within particles like the proton and neutron. To keep them trapped, they must interact by an extremely strong force with an unusual property: it does not weaken as the quarks move apart(!). This is unlike any of the better understood forces, such as gravity and electromagnetism.

All of this was a lot to understand, but the most amazing thing is that there exists a theory, called the Standard Model, that follows from a relatively small set of constraints called symmetry principles and explains virtually every experiment that has been done -- with a few fitted parameters, of course!

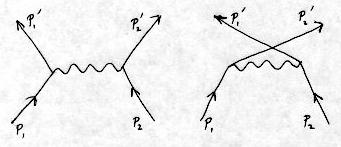

For example, here are the two second-order Feynman diagrams for scattering of two electrons:

There is a fundamental conservation law: the conservation of leptons. Lepton conservation must hold at each vertex. Because an electron is a lepton, you see at each vertex that an electron goes in and comes out. The net number of leptons in the universe, counting each antilepton as minus one, can never change. This is because all lepton interactions go through 3-point vertices such as the ones of QED or the electroweak interaction (see below).

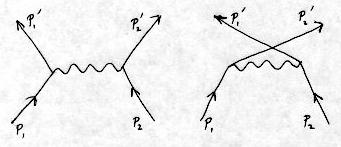
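Lepton-number bookkeeping is simple enough to automate. The Python sketch below uses particle labels and a lepton-number table that are ad hoc choices for this example. It counts only the total lepton number (leptons minus antileptons), not separate electron-type and muon-type numbers. Note that pair annihilation destroys both particles: what stays fixed is the net number, not the count of leptons.

```python
# Lepton number: +1 for each lepton, -1 for each antilepton, 0 for everything else.
# Labels are illustrative; electron- and muon-type numbers are lumped together here.
LEPTON_NUMBER = {
    "e-": 1, "nu_e": 1, "mu-": 1, "nu_mu": 1,
    "e+": -1, "anti-nu_e": -1, "mu+": -1, "anti-nu_mu": -1,
    "gamma": 0, "p": 0, "n": 0,
}

def net_lepton_number(particles):
    return sum(LEPTON_NUMBER[p] for p in particles)

def conserves_lepton_number(initial, final):
    return net_lepton_number(initial) == net_lepton_number(final)

reactions = {
    "electron scattering":        (["e-", "e-"], ["e-", "e-"]),
    "pair annihilation":          (["e-", "e+"], ["gamma", "gamma"]),
    "neutron beta decay":         (["n"], ["p", "e-", "anti-nu_e"]),
    "beta decay with a neutrino": (["n"], ["p", "e-", "nu_e"]),
}
for name, (before, after) in reactions.items():
    print(f"{name}: {'allowed' if conserves_lepton_number(before, after) else 'forbidden'}")
```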

Here are the diagrams for electron-positron annihilation:

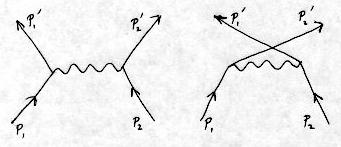

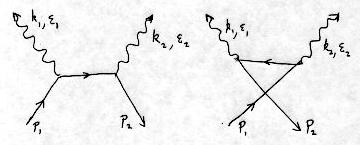

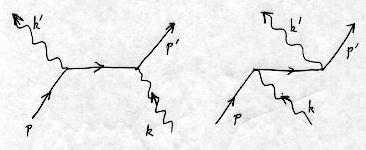

And finally, here are the diagrams for Compton scattering of an electron by a photon:

These "principles" are just versions of Heisenberg's Uncertainty Principle. Here's what is important: a force is generated between two particles (such as two electrons, or an electron and a neutrino) when virtual particles (such as the photon, or the W or Z) are exchanged (i.e., emitted and absorbed).

| Kind | Particle | Charge (units of proton charge) | Type of interaction(s) |
|---|---|---|---|
| lepton | electron | -1 | electromagnetic, weak |
| lepton | electron neutrino | 0 | weak |
| quark | up | +2/3 | electromagnetic, weak, color |
| quark | down | -1/3 | electromagnetic, weak, color |
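The fractional quark charges add up to the familiar whole-number charges of the hadrons. The Python sketch below sums the quark charges from the table for a few combinations. These are the proton (uud), the neutron (udd), and the two charged pions: an up quark with an anti-down, and a down quark with an anti-up. The trailing "~" marking an antiquark is an ad hoc notation for this example. Exact fractions avoid rounding.

```python
from fractions import Fraction

# Quark charges in units of the proton's charge.
QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}

def hadron_charge(constituents):
    """Total charge of a quark combination; an antiquark is written with a trailing '~'."""
    total = Fraction(0)
    for q in constituents:
        if q.endswith("~"):              # antiquark: opposite charge
            total -= QUARK_CHARGE[q[:-1]]
        else:
            total += QUARK_CHARGE[q]
    return total

for name, quarks in [("proton", "uud"), ("neutron", "udd"), ("pi+", ["u", "d~"]), ("pi-", ["d", "u~"])]:
    print(f"{name}: {hadron_charge(quarks)}")
```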

What is new for the quarks is the color force. As mentioned above, the hadrons (mesons and baryons) were thought to be fundamental until the 1970s, when it was finally understood that they were composed of quarks. The entire field of nuclear physics was concerned with how the neutrons and protons combined to form the observed nuclei. The nuclear force was observed to be an attractive force between all hadrons. It fell off quickly over a distance comparable to the size of an atomic nucleus (about 10^-15 meters). Nuclear physics deals with attractive binding energies of about 10 MeV (10 million electron volts) per nucleon. That is approximately a million times greater than the atomic binding energy per electron in molecules and solids. The nuclear force was also studied with large accelerators, in collisions of protons with very high kinetic energies of up to 100,000 MeV. There were many phenomenological descriptions of the nuclear force over this large range of energies, but it was not understood in a fundamental way. The force was so strong that the interaction between nucleons could not be expanded in a perturbation series, as was done with QED. As a result, even the simplest calculations could not be done with precision from first principles. An example is the bound states of the deuteron, the deuterium nucleus of one proton and one neutron. In QED, the force between charged particles is due to exchange of virtual photons. By analogy, the nuclear force between hadrons was postulated to be due to exchange of virtual mesons, such as pions. Like photons, which have spin 1, the mesons are bosons; the lightest mesons, the pions, have spin 0. But the dissatisfaction with this theory was so great that people came up with some pretty wild ideas in the 1960s, such as the "bootstrap" theory of Geoffrey Chew.
In the bootstrap theory, hadron egalitarianism was taken to the extreme: none of the hadrons were fundamental because each baryon and meson was surrounded by a cloud consisting of all the hadrons, and somehow the observed hadrons were the mysteriously allowed composites of these clouds -- the hadrons were generated from all other hadrons by "pulling themselves up by their bootstraps." Likewise, the force between hadrons came out of these clouds by exchange of hadrons (presumably both mesons and baryons). Unfortunately, this scheme was so complex that little could be calculated.
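Yukawa's link between the range of a force and the mass of the exchanged meson is an order-of-magnitude estimate. A virtual particle of mass m can exist for a time of about hbar / (m c^2), so it travels at most about hbar / (m c). The Python sketch below uses that rule of thumb in both directions, and the 2 fm input range is an illustrative assumption. The result matches the "about 200 electron masses, about 1/10 of a proton" prediction. It also shows that exchange of the actual charged pion corresponds to a range of about 1.4 fm.

```python
HBAR_C_MEV_FM = 197.327    # hbar * c in MeV * femtometres (1 fm = 1e-15 m)
ELECTRON_MASS_MEV = 0.511
PROTON_MASS_MEV = 938.272

def yukawa_range_fm(mass_mev):
    """Rough range hbar / (m c) of a force carried by a particle of rest energy mass_mev."""
    return HBAR_C_MEV_FM / mass_mev

def yukawa_mass_mev(range_fm):
    """Invert the estimate: rest energy of the carrier implied by a range in fm."""
    return HBAR_C_MEV_FM / range_fm

m = yukawa_mass_mev(2.0)   # illustrative range of 2 fm
print(f"carrier mass for a 2 fm range: {m:.0f} MeV = {m / ELECTRON_MASS_MEV:.0f} electron masses"
      f" = {m / PROTON_MASS_MEV:.2f} proton masses")
print(f"range of charged-pion exchange: {yukawa_range_fm(139.57):.2f} fm")
```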

I give you this background in some detail so that you can appreciate the breakthrough that occurred with quarks. The force between quarks is much stronger than the nuclear force between nucleons. And yet it is possible, at least in principle, to calculate the forces between quarks within a meson or baryon. (In the lingo of quantum field theory, the interaction between quarks is renormalizable, just as QED and the electroweak interactions are. The reason these interactions are renormalizable is very deep, and depends on some abstract symmetries.) The basic theory of the interaction between quarks is called quantum chromodynamics (QCD), in analogy to QED. In QED an uncharged particle (the photon) is responsible for the force between charged particles. In QCD, by contrast, "charged" gluons are exchanged between quarks. These gluons do not have electric charge; they have a different type of charge that comes in three different values, called "colors." These colors are whimsically called "red", "green" and "blue." Each quark has one of these color charges. The analogy to colors is as follows: all observable hadrons are "colorless." There are two ways a hadron can be colorless. In a meson, made of a quark-antiquark pair, the quark can be (say) "red" and the antiquark "antired", giving no net color. In a baryon with 3 quarks, each quark can be a different color. In color theory, a combination of the three primary colors gives white (no color), and in the same way the baryon is colorless. These are the simplest colorless combinations, and they account for all the ordinary mesons and baryons; no combination of quarks with net color has ever been observed. Now, a peculiar thing about QCD is that the gluons have color charge; in fact, each gluon has one color charge and one anticolor charge. The fundamental gluon interaction between quarks causes a transfer of color charge from one quark to another. For example, a red and a blue quark can exchange colors.
The Feynman diagram for the color exchange looks like this:

      ^                 ^
    b  \               /  r
        \             /
         \           /
          |= = = = =|   gluon (r, anti-b)
         /           \
        /             \
    r  /               \  b

This is a picture of the gluon exchange process. Think of time going upward. On the left, a red quark turns into a blue quark, emitting a (red, antiblue) gluon to conserve color charge at the "vertex." On the right, a blue quark absorbs the (red, antiblue) gluon to become a red quark. No statement is made about which of these processes happens first; the two events (gluon creation and absorption) occur in such a way that either one of them can happen first. (Note the close analogy to QED, where a virtual photon is transferred between two electrons.) In QCD, the virtual gluon is labeled as (red, antiblue), but it could just as well be labelled (antired, blue).
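The color bookkeeping can be made concrete with a standard device from the mathematics of QCD. Each color is assigned a pair of numbers, its "weight": the eigenvalues of two diagonal color operators. Anticolors get the negatives. The three quark weights sit at the corners of an equilateral triangle centered on zero. In the Python sketch below, zero total weight serves as the test for "colorless." That is a necessary condition for a true color singlet but not a sufficient one, so treat this as an illustration of the analogy rather than full QCD. The labels "r", "g", "b" and the "anti-" prefix are ad hoc notation for this example. The last check is the vertex in the diagram: red becomes blue by emitting a (red, antiblue) gluon.

```python
import math
from itertools import product

# (T3, T8) color weights of the quark colors; an anticolor has the negated weight.
WEIGHTS = {
    "r": (0.5, 1 / (2 * math.sqrt(3))),
    "g": (-0.5, 1 / (2 * math.sqrt(3))),
    "b": (0.0, -1 / math.sqrt(3)),
}

def weight(label):
    """Weight of a color such as 'r' or an anticolor such as 'anti-r'."""
    if label.startswith("anti-"):
        t3, t8 = WEIGHTS[label[len("anti-"):]]
        return (-t3, -t8)
    return WEIGHTS[label]

def total_weight(labels):
    return (sum(weight(lab)[0] for lab in labels), sum(weight(lab)[1] for lab in labels))

def has_zero_color(labels, tol=1e-12):
    return all(abs(x) < tol for x in total_weight(labels))

def same_weight(a, b, tol=1e-12):
    return all(abs(x - y) < tol for x, y in zip(a, b))

print(has_zero_color(["r", "g", "b"]))      # baryon: True
print(has_zero_color(["r", "anti-r"]))      # meson: True
print(any(has_zero_color(pair) for pair in product(WEIGHTS, repeat=2)))  # two quarks: False

# Vertex in the diagram: red quark -> blue quark + (red, antiblue) gluon.
print(same_weight(weight("r"), total_weight(["b", "r", "anti-b"])))      # True
```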

The consequences of having colored gluons mediate the color force between quarks are startling. Like the weak force, the color force acts only over short distances (in practice, within a hadron). The reason is completely different, though: the color force does not weaken as the quarks are pulled apart. The analogy is to a tube of energy. Because the gluons themselves carry color, they attract one another, and the gluons (and the field energy) are confined to a narrow tube between the quarks. If you try to pull two quarks apart, the tube gets longer, and the energy increases with the length. If the tube gets too long, it breaks, and a new quark/antiquark pair is created at the break. Each new quark or antiquark pairs up with one of the original ones. The result is two colorless hadrons (for example, two mesons) rather than free quarks. The newly created pair does not itself form a meson, because its members end up at opposite ends of the break. Try to draw this, using the diagram above, and with the assignment of the correct color to each of the quarks generated in the break in the gluon line. In a very high energy collision, it is common for the strong interaction to generate many mesons and baryons in this manner. For example, electrons and their antiparticles (positrons) collide at high energy at the Stanford Linear Accelerator Center (SLAC), and they annihilate to form jets of hadrons. A jet is a set of particles all moving in roughly the same direction from the same initial vertex. These jets are in fact a verification of the unusual property of the color force. The electron and positron annihilate to form a virtual photon, which immediately forms a very energetic quark/antiquark pair. If the quark and antiquark were to separate and become free, they would have naked color, which is not allowed. So as they separate, each one creates a jet of hadrons moving generally in its original direction, by the QCD process described above. There are even 3-jet events, where, additionally, a gluon tries to escape and gets converted into a jet of hadrons.
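A common way to model this behavior is the Cornell potential, V(r) = -kappa hbar c / r + sigma r. It combines a Coulomb-like attraction at short distances with an energy that grows linearly with separation, which is the "tube" of field energy. It is a phenomenological model fitted to heavy-quark data, not something derived here. The parameter values in the Python sketch below are typical rounded magnitudes chosen for illustration. The printout shows the stored energy climbing steadily as the quarks separate. The force, instead of dying away, settles to a constant of roughly 0.9 GeV per femtometre.

```python
HBAR_C_GEV_FM = 0.1973           # hbar * c in GeV * fm

# Illustrative Cornell-potential parameters (typical fitted magnitudes, not exact values).
KAPPA = 0.52                     # strength of the short-distance, Coulomb-like term
SIGMA = 0.18 / HBAR_C_GEV_FM     # "string tension": 0.18 GeV^2 converted to GeV/fm

def cornell_potential(r_fm):
    """Quark-antiquark potential energy in GeV at separation r_fm (fm)."""
    return -KAPPA * HBAR_C_GEV_FM / r_fm + SIGMA * r_fm

def cornell_force(r_fm):
    """Magnitude of the attractive force dV/dr, in GeV/fm."""
    return KAPPA * HBAR_C_GEV_FM / r_fm**2 + SIGMA

for r in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(f"r = {r:4.2f} fm: V = {cornell_potential(r):6.3f} GeV, force = {cornell_force(r):5.3f} GeV/fm")
```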

We've seen that the strong force is really a color force between quarks. How does this give rise to the old "nuclear" force between hadrons? After all, if the baryons and mesons are all colorless, how can there be a force between them?

The answer is interesting. It turns out that the nuclear force is a weak residual effect of fluctuations in the color fields. Each hadron is colorless in some time-averaged sense. Even so, at any moment, if you are very close to it, it will appear to have a small amount of color charge. Suppose you are right next to a proton. At some instant of time, the red quark might be closer than the blue and green quarks, so the proton will appear to be slightly reddish. That net red color will in turn tug on the color charges of the quarks in a neighboring proton. Another way of describing this is that a fluctuation in the color charges of one proton causes a corresponding color polarization in the second. The two slightly polarized protons will then experience an attractive force. There is an analogous situation with the electromagnetic force: two neutral atoms will weakly attract each other at very close distances, from mutual polarization due to coherent charge fluctuations. This is called the van der Waals effect, after the physicist who described the weak electrostatic force between electrically neutral gas molecules. That force causes a real gas to behave slightly differently from an ideal, non-interacting gas. Van der Waals modified the pV = nRT equation of state for an ideal gas to account for such interactions, as well as for the small volume occupied by each molecule.
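Van der Waals's correction can be written as (p + a n^2 / V^2)(V - n b) = nRT. The a term lowers the pressure because of the weak mutual attraction. The b term removes the volume taken up by the molecules themselves. The Python sketch below compares the two equations of state for one mole of carbon dioxide in one litre at 300 K. The a and b values are approximate textbook constants used for illustration. For these conditions the attraction wins, and the van der Waals pressure is roughly 10 percent below the ideal-gas value.

```python
R = 0.08314   # gas constant, L*bar/(mol*K)

def ideal_gas_pressure(n, volume, temp):
    """pV = nRT solved for p (bar); volume in litres, temp in kelvin."""
    return n * R * temp / volume

def van_der_waals_pressure(n, volume, temp, a, b):
    """(p + a n^2 / V^2)(V - n b) = nRT solved for p (bar)."""
    return n * R * temp / (volume - n * b) - a * n**2 / volume**2

# Approximate van der Waals constants for carbon dioxide (illustrative values).
A_CO2, B_CO2 = 3.640, 0.04267   # L^2*bar/mol^2 and L/mol
ideal = ideal_gas_pressure(1.0, 1.0, 300.0)
vdw = van_der_waals_pressure(1.0, 1.0, 300.0, A_CO2, B_CO2)
print(f"ideal gas:     {ideal:.2f} bar")
print(f"van der Waals: {vdw:.2f} bar")
```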

We've talked about the leptons and quarks, and about the particles like the photon, W, Z and gluons that are responsible for the interactions between the leptons and quarks. Let's go back and look at these particles in a new way, depending on the amount of intrinsic angular momentum (also called spin) that they possess.

There is something very deep and important about these groups of particles. As mentioned above, the leptons and quarks are fermions. They have intrinsic angular momentum. The classical analogy is to a spinning top, but the angular momentum of these particles is a quantum mechanical property, and defies our classical intuition in a number of ways. Planck's constant divided by 2 pi is the fundamental unit of angular momentum (or spin). All leptons and quarks have 1/2 unit of spin; all the interaction particles have 1 unit of spin. Particles with half-integral spin are called fermions; particles with integral spin are called bosons. Unlike fermions, bosons such as photons have a tendency to get into the same quantum state. A laser is a device for putting trillions of photons into exactly the same quantum state, which can make a very intense, monochromatic and collimated light wave. These interaction particles are referred to as intermediate vector bosons. They mediate between the fermions to cause the interaction. They are vector particles because they have spin 1 and transform in a particular way under a Lorentz transformation (see below). They are bosons because the spin is an integral multiple (namely, 1) of the fundamental unit. One more fundamental boson is expected: the quantum of gravity (the graviton). It should have spin 2, making it a tensor rather than a vector boson. It has not been observed because its interaction with matter is far too weak at energies attainable (now or in the future) in particle accelerators.

To get an idea of how fundamental the fermion spin is, consider this. Suppose you have a particle with this spin 1/2 property. In quantum mechanics, if you measure the spin along any direction you choose, you will find either +1/2 or -1/2: it will be either "up" or "down." Nothing in between! In non-relativistic quantum mechanics, a particle with spin 1/2 will generally exist in a superposition of these two pure spin states, described by a wave function with 2 components. In a relativistic description, the electron wave function has not 2, but 4 components. The great triumph of the Dirac equation was that it not only explained the electron spin but also predicted the existence of the positron. The wave function needed 4 components to describe both electrons and positrons! Dirac also needed 4 components for the wave function of a spin 1/2 particle to satisfy the basic symmetry requirements of Einstein's relativity. Those requirements are energy conservation, momentum conservation, and something called Lorentz invariance. Lorentz invariance just means a consistent description in different moving frames of reference (called Lorentz frames). So you see that there is a kind of inevitability. The symmetry requirements and special relativity forced Dirac to come up with his equation; he really didn't have any latitude. There was only one unspecified parameter, the mass of the particle. And thus the Dirac equation describes the six leptons and the six quarks, all of which have different masses, plus their antiparticles.
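The "up or down, nothing in between" rule can be checked directly from the standard 2x2 spin matrices (the Pauli matrices, times one half). The Python sketch below computes the possible results of a spin measurement along an arbitrary direction, which always come out as +1/2 and -1/2. It also computes the probability of each result along z for a spin prepared pointing in another direction. Only the non-relativistic two-component description is used here, not the four-component Dirac wave function. The function names are illustrative.

```python
import cmath
import math

def spin_eigenvalues(nx, ny, nz):
    """Possible results (units of hbar) of measuring spin along the unit vector (nx, ny, nz).

    The operator is S_n = (1/2)[[nz, nx - i ny], [nx + i ny, -nz]], a traceless Hermitian
    2x2 matrix, so its eigenvalues are +/- sqrt(nz^2/4 + |nx - i ny|^2/4).
    """
    half = math.sqrt(nz**2 / 4 + abs(complex(nx, -ny)) ** 2 / 4)
    return (half, -half)

def z_probabilities(theta, phi=0.0):
    """Probabilities of +1/2 and -1/2 along z for a spin prepared along polar angle theta.

    The state is the superposition cos(theta/2)|up> + e^{i phi} sin(theta/2)|down>.
    """
    up = complex(math.cos(theta / 2))
    down = cmath.exp(1j * phi) * math.sin(theta / 2)
    return abs(up) ** 2, abs(down) ** 2

print(spin_eigenvalues(0.6, 0.0, 0.8))   # any unit direction gives +1/2 and -1/2
print(z_probabilities(math.pi / 2))      # spin along x, measured along z: 50/50
```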

Thus, it turns out that characterizing particles by their intrinsic angular momentum is very useful. The spin 1/2 fermions (leptons and quarks) are described by spinors: their wave function, which is the solution to the Dirac equation, has 4 components. A scalar particle has spin 0 and only one component. For decades no fundamental scalar particle was known. The long search for one, the Higgs boson, ended with its discovery at CERN in 2012. The Higgs field gives mass to the W and Z bosons and to the quarks and charged leptons through their interactions with it. All the particles responsible for interactions (except for gravity), such as the photon, the electroweak W and Z, and the color gluons, have spin 1. They are vectors in 4-dimensional space-time. The graviton, which is the quantum particle responsible for the gravitational interaction, is a tensor boson, having spin 2. These terms (scalar, vector, tensor and spinor) are well-defined mathematically. They describe how the wave functions for the particles change under the basic symmetry operations of translation, rotation, and boost. A boost is where you change the relative speed of the reference frames. The rotations and boosts are combined into a set called the Lorentz transformations. Adding symmetry under translation in space and time gives a larger set of symmetry operations called the Poincaré symmetry group. (Those translations correspond to momentum and energy conservation.) All the fundamental equations of physics must be invariant (i.e., not change in form) under all changes described by this group: translation, rotation and boosts.
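The practical content of Lorentz invariance is that some combinations of energy and momentum do not depend on the frame. The Python sketch below works in units with c = 1, and the numbers are arbitrary. It applies a boost and a rotation to a particle's energy-momentum four-vector. The energy and momentum components change, but E^2 - p^2, the square of the rest mass, does not. A boost with the right speed even brings the particle to rest.

```python
import math

def boost_x(four_momentum, beta):
    """Lorentz boost along x with speed beta (units with c = 1)."""
    e, px, py, pz = four_momentum
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return (gamma * (e - beta * px), gamma * (px - beta * e), py, pz)

def rotate_z(four_momentum, angle):
    """Ordinary rotation of the spatial part about the z axis."""
    e, px, py, pz = four_momentum
    c, s = math.cos(angle), math.sin(angle)
    return (e, c * px - s * py, s * px + c * py, pz)

def mass_squared(four_momentum):
    """Invariant E^2 - |p|^2: the same in every Lorentz frame."""
    e, px, py, pz = four_momentum
    return e * e - (px * px + py * py + pz * pz)

p = (5.0, 3.0, 0.0, 0.0)   # energy 5, momentum 3, so mass 4 (arbitrary units)
for q in (p, boost_x(p, 0.6), rotate_z(boost_x(p, -0.8), 1.0)):
    print([round(x, 3) for x in q], "m^2 =", round(mass_squared(q), 9))
```

The boost with beta = 0.6 gives zero momentum: it is the particle's rest frame.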

The Lorentz transformations act on particles, but they were initially discovered as a transformation on space and time by Lorentz (who else?), in work culminating in 1904. However, their proper (and much simpler) interpretation was given in 1905 by Einstein. In the same year he derived the famous relation E = mc^2 and showed that Maxwell's equations of electromagnetism obey the basic symmetries required by special relativity.

Did Einstein get a Nobel prize for the special theory of relativity? No. He received the 1921 Nobel prize, some 16 years later, for his explanation of the photoelectric effect, which he also produced in 1905. In that effect, electrons are emitted from a metal with a maximum energy that increases linearly with the frequency (inverse wavelength) of the light but is independent of its intensity. Einstein's starting point for relativity was to accept the results of two American scientists in Cleveland, Ohio. In the late 1880s, Michelson and Morley attempted to measure the speed of the Earth through the postulated "ether" that filled the universe. (Michelson founded the physics department at Case and was the first American to receive a Nobel Prize in science; Morley was a chemistry professor at Western Reserve.) As the Earth goes around the sun, the speed of the Earth relative to this ether should change. They used an interferometer to compare the speed of light in two perpendicular directions. To their surprise, they were unable to detect any difference, regardless of the Earth's location in its orbit around the sun. Einstein accepted these measurements at face value and used their result in his basic postulate: that all inertial frames of reference are equivalent. A corollary of this is that the speed of light is measured to be a constant independent of the motion of the source or the receiver. As a consequence, space and time cannot be described independently. This is far from intuitive, because we live in a world where everything we see (except for light) goes much slower than the speed of light. Einstein's special theory of relativity was very different from the previous picture, where space and time were independent, and it took many years to become fully accepted. The greatest irony is that as late as the 1930s, there was still one prominent hold-out in the United States. He was Dayton C. Miller, who had chaired the physics department at Case (now part of Case Western Reserve University) for over 40 years!
Miller spent his career trying to prove that both Einstein and (especially) his great predecessor Michelson were wrong; that there was an ether; that there was an absolute frame of reference in which the ether is stationary; and that the speed of light depends on the motion relative to the stationary ether. One might say that Miller was to physics as Florence Foster Jenkins was to opera.
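Why was the Michelson-Morley null result so convincing? A back-of-the-envelope estimate helps. If light moved through a stationary ether, the round trip along the arm parallel to the Earth's motion would take slightly longer than the trip across it, by about (L/c)(v/c)^2; rotating the apparatus by 90 degrees swaps the arms and doubles the effect. The sketch below (hypothetical function name) computes the expected shift in interference fringes, using assumed round numbers: an effective, mirror-folded arm length of about 11 m, visible light of about 550 nm, and the Earth's orbital speed of about 30 km/s.

```python
C = 299_792_458.0  # speed of light, m/s


def expected_fringe_shift(arm_length_m, wavelength_m, v_m_per_s):
    """Fringe shift predicted by the ether theory when the interferometer is
    rotated by 90 degrees, to lowest order in (v/c)^2.

    Parallel arm round trip:       (2L/c) / (1 - v^2/c^2)        ~ (2L/c)(1 + v^2/c^2)
    Perpendicular arm round trip:  (2L/c) / sqrt(1 - v^2/c^2)  ~ (2L/c)(1 + v^2/(2c^2))
    Path difference ~ L (v/c)^2; rotating swaps the arms, doubling it.
    """
    path_difference = 2.0 * arm_length_m * (v_m_per_s / C) ** 2
    return path_difference / wavelength_m


# Assumed round numbers: ~11 m effective arm, ~550 nm light, ~30 km/s orbital speed.
shift = expected_fringe_shift(11.0, 550e-9, 3.0e4)
print(f"expected shift: {shift:.2f} fringe")
```

A predicted shift of a sizable fraction of a fringe was well within the instrument's sensitivity, which is why failing to see it was such a striking result.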

And the answer is: yes! The vector bosons that carry the electromagnetic force (the photon), the weak force (the Z0, W+ and W-), and the strong force (the gluons) can all be generated by requiring that the laws of physics be invariant under some new, internal symmetries. This is why the Standard Model has been such a success: it shows that (1) those symmetries generate the observed forces and quanta and (2) with such special symmetry-generated forces, the amplitudes and probabilities for all interactions are finite and properly behaved. Most theories of interacting particles are not well-behaved: they give infinities when you try to calculate energies and probabilities.

These internal symmetries are called gauge symmetries, and in 1971 't Hooft and Veltman proved that gauge-symmetric theories are renormalizable, i.e., well-behaved when you try to calculate measurable things such as scattering cross-sections. (A cross-section is the effective area that a particle appears to present in a collision with another particle.)

It had been known for many years that the theory of QED satisfied two gauge invariances. There is a global invariance that works like this. Suppose you multiply every lepton wavefunction in the universe by a constant phase, which is a complex number of unit length. (You can think of this complex number as a vector that points from the origin to somewhere on a circle of radius 1.) If you require that the laws of physics are independent of this global phase (or global gauge), you get the law of conservation of charge! And there is a local gauge invariance that is even stranger. If you assume that the laws of physics must not change if you multiply the wavefunction of every lepton in the universe by a phase that is an arbitrary function of position and time, you must have some new field to compensate for the rate of change of this phase with position or time, and that field is exactly the field of electromagnetism! (The electric and magnetic fields, which are directly measurable, are space and time derivatives of this gauge field.) So this internal symmetry that the wavefunction must satisfy automatically leads to a modification of the Dirac equation to include the interaction of leptons with photons. Everything seems to come out of symmetry.
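The local gauge argument can be checked numerically in a toy setting. The sketch below assumes a one-dimensional, time-independent wavefunction; all function names are hypothetical, and the sign convention D = d/dx - iQA for the "covariant" derivative is one common choice, not the only one. Multiplying the wavefunction by a position-dependent phase e^(i alpha(x)) never changes its magnitude, but it does change the magnitude of its ordinary derivative, so the physics would change. If the gauge field is shifted at the same time by (1/Q) d(alpha)/dx, the covariant derivative picks up only the same phase factor, and its magnitude is unchanged.

```python
import cmath
import math

Q = 1.0    # coupling strength (the charge), illustrative value
H = 1e-5   # step for the central finite difference


def psi(x):
    # hypothetical wavefunction: a Gaussian wave packet
    return math.exp(-x * x) * cmath.exp(2j * x)


def gauge_field(x):
    # hypothetical background gauge potential A(x)
    return 0.3 * math.sin(x)


def alpha(x):
    # arbitrary position-dependent phase: the local gauge transformation
    return 0.7 * x * x + math.cos(3.0 * x)


def deriv(f, x):
    # central finite-difference approximation to df/dx
    return (f(x + H) - f(x - H)) / (2.0 * H)


def covariant_derivative(f, a, x):
    # D f = df/dx - i Q a(x) f(x): the derivative "corrected" by the gauge field
    return deriv(f, x) - 1j * Q * a(x) * f(x)


def psi_prime(x):
    # the wavefunction after the local phase change
    return cmath.exp(1j * alpha(x)) * psi(x)


def gauge_field_prime(x):
    # the compensating change in the gauge field: A -> A + (1/Q) d(alpha)/dx
    return gauge_field(x) + deriv(alpha, x) / Q


for x in (-1.0, 0.5, 1.2):
    naive = abs(deriv(psi_prime, x)) - abs(deriv(psi, x))
    covariant = abs(covariant_derivative(psi_prime, gauge_field_prime, x)) - abs(
        covariant_derivative(psi, gauge_field, x)
    )
    print(f"x={x:+.1f}  plain derivative changes by {naive:+.3f}, "
          f"covariant derivative by {covariant:+.1e}")
```

With a constant alpha (the global case), the plain derivative is already unchanged and no compensating field is needed; it is only the position dependence that forces the gauge field into the theory.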

What about the weak and strong interactions? The answer is that here, too, the Standard Model prescribes a local gauge symmetry (though a more complicated one than for electromagnetism) for both the weak and the strong force. It also explains how the electromagnetic and weak forces are really parts of a higher symmetry, the electroweak symmetry. The electromagnetic and weak forces only appear to be two separate forces because at low energies ("low" meaning less than about 100 GeV, roughly 100 times the rest energy of the proton and tens of billions of times the energy of a photon of visible light!) the symmetry is broken by a field that is postulated but has not yet been observed. This is the field of a particle called the Higgs boson, a very strange beast because, if it exists, it will be the only known elementary particle of spin 0. It is hoped that if the Higgs boson exists, it is not too heavy to be produced in the next generation of particle accelerators under development at CERN. These accelerators will be able to produce particles with energies of several trillion electron volts (TeV), several thousand times the rest energy of the proton.
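The energy comparisons in that paragraph are easy to check with a few lines of arithmetic. This sketch uses the photon energy relation E = hc/lambda and the proton rest energy; the choice of 5 TeV to stand for "several TeV" is my assumption, and the variable names are hypothetical.

```python
HC_EV_NM = 1239.84             # Planck's constant times c, in eV*nm
PROTON_REST_ENERGY_EV = 938.272e6
ELECTROWEAK_SCALE_EV = 100e9   # the "about 100 GeV" quoted in the text
SEVERAL_TEV_EV = 5e12          # "several TeV", taken here as 5 TeV (assumption)


def photon_energy_ev(wavelength_nm):
    """Energy E = hc / lambda of a photon of the given wavelength."""
    return HC_EV_NM / wavelength_nm


print(f"100 GeV / proton rest energy: {ELECTROWEAK_SCALE_EV / PROTON_REST_ENERGY_EV:.0f}")
print(f"5 TeV / proton rest energy: {SEVERAL_TEV_EV / PROTON_REST_ENERGY_EV:.0f}")
for wavelength in (400, 550, 700):  # violet, green and red light, in nm
    ratio = ELECTROWEAK_SCALE_EV / photon_energy_ev(wavelength)
    print(f"100 GeV / {wavelength} nm photon: {ratio:.1e}")
```

Visible photons carry roughly 2 to 3 eV, so the 100 GeV scale is a few times 10^10 times larger, depending on the color of the light.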

The strong interaction, with its 3 colors and 8 gluons, comes from a yet more complicated gauge symmetry, called SU(3) color, which is derived by assuming that the color force is independent of color. Then the red-red force is the same as the red-green force, etc. Historically, SU(3) was the symmetry group that Gell-Mann and Ne'eman had invoked in 1961 to explain, in an approximate way, the huge zoo of mesons and baryons that had been produced in accelerators by that time. In his colorful fashion, Gell-Mann called it the Eightfold Way, because of the way the symmetry grouped some of the mesons and baryons into octets of related families. The Eightfold Way was a mathematical description of the observed particles. Three years later, Gell-Mann realized that the octets could be interpreted as the result of hadrons being made up of fractionally charged entities, which he named quarks. The SU(3) symmetry came from the assumption that the hadrons contained three types of quarks, labeled by their three flavors (up, down and strange), and that the interactions between the quarks were independent of their flavor. This was similar to the old "isospin" symmetry in the nuclear force between neutrons and protons. In this case, a force that is independent of flavor is said to have an SU(3) flavor symmetry. The SU(3) flavor symmetry is only approximate, but the Standard Model uses this same symmetry group to explain the strong force. There it is called SU(3) color, and in the Standard Model it is an exact symmetry.
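One pleasant consequence of the quark picture can be seen by simple counting. The sketch below (hypothetical names) assumes only the three light flavors, with the charges given in the quark table, and lists every unordered choice of three quarks from u, d and s; these flavor-symmetric combinations correspond to the ten-member baryon decuplet, the other main Eightfold-Way multiplet besides the octets. (The octet itself needs mixed-symmetry combinations, which this simple enumeration does not construct.) All ten have integer charge, and they fall into rows of 4, 3, 2 and 1 by strangeness.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations_with_replacement

CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3), "s": Fraction(-1, 3)}
STRANGENESS = {"u": 0, "d": 0, "s": -1}


def three_quark_states(flavors="uds"):
    """List (content, charge, strangeness) for every unordered choice of three
    quark flavors, i.e. the flavor-symmetric combinations."""
    states = []
    for combo in combinations_with_replacement(flavors, 3):
        charge = sum(CHARGE[q] for q in combo)
        strangeness = sum(STRANGENESS[q] for q in combo)
        states.append(("".join(combo), charge, strangeness))
    return states


states = three_quark_states()
for strangeness in (0, -1, -2, -3):
    row = [f"{name}({int(charge):+d})" for name, charge, s in states if s == strangeness]
    print(f"S = {strangeness:+d}: " + "  ".join(row))
print("members per S value:", dict(Counter(s for _, _, s in states)))
```

The S = 0 row (uuu, uud, udd, ddd) has charges +2, +1, 0 and -1, exactly the charges of the four delta resonances.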

Lepton              Charge   Spin   Mass (MeV)    Lifetime (sec)
=======================================================================
electron              -1     1/2    0.511         stable
electron neutrino      0     1/2    < 0.00002     stable? (*)
-----------------------------------------------------------------------
muon                  -1     1/2    105.7         2.2 x 10^(-6)
muon neutrino          0     1/2    < 0.16        stable? (*)
-----------------------------------------------------------------------
tau                   -1     1/2    1777          2.9 x 10^(-13)
tau neutrino           0     1/2    < 18          stable? (*)
-----------------------------------------------------------------------

The charm, strange, top and bottom quarks all decay to up and down quarks through the weak interaction. The quantum numbers for the quarks -- S (strangeness), C (charm), T ("truth" or topness), B ("beauty" or bottomness) -- are conserved in all strong interactions, but they are not conserved in weak interactions. For strangeness, this "explains" (1) the associated production of strange hadrons (hadrons with at least one strange quark) by the strong interaction, i.e., they are always produced in pairs with opposite S quantum numbers, so that total S is conserved, and (2) the weak decay of strange hadrons to protons, neutrons and pions, which are composed of up and down quarks only. Through weak decays, all heavier quarks, which are always trapped in mesons or baryons, eventually turn into up and down quarks. Even an isolated neutron (1 up, 2 down) is unstable: because the down quark has slightly more mass than the up quark, the neutron decays, with a mean lifetime of about 15 minutes, into a proton (2 up, 1 down) plus an electron and an electron antineutrino.
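The bookkeeping for neutron decay can be sketched in a few lines. This example (hypothetical function names) adds up quark charges to show that electric charge balances, and uses rounded measured rest energies to show that the decay releases energy, which is why it can happen at all. The antineutrino's tiny mass is neglected.

```python
from fractions import Fraction

QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}

# Rest energies in MeV (rounded measured values)
M_NEUTRON = 939.565
M_PROTON = 938.272
M_ELECTRON = 0.511


def hadron_charge(quarks):
    """Electric charge of a hadron from its quark content, e.g. 'udd'."""
    return sum(QUARK_CHARGE[q] for q in quarks)


def neutron_decay_energy():
    """Kinetic energy shared by the products of n -> p + e + antineutrino,
    neglecting the antineutrino mass."""
    return M_NEUTRON - M_PROTON - M_ELECTRON


# Charge before (neutron) equals charge after (proton + electron + antineutrino).
charge_before = hadron_charge("udd")
charge_after = hadron_charge("uud") + (-1) + 0
print(charge_before, charge_after)  # 0 0
print(f"energy released: {neutron_decay_energy():.3f} MeV")
```

The energy released is positive but small, less than an MeV, which is part of the reason the neutron lives so long compared with most other unstable hadrons.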

Quark flavor   Quantum #   Charge   Spin   Mass (GeV)   Lifetime
=========================================================================
down           --          -1/3     1/2    0.31         stable
up             --           2/3     1/2    0.31         stable
-------------------------------------------------------------------------
strange        S = -1      -1/3     1/2    0.5          decays
charm          C = +1       2/3     1/2    1.6          decays
-------------------------------------------------------------------------
bottom         B = -1      -1/3     1/2    4.6          decays
top            T = +1       2/3     1/2    180          decays
-------------------------------------------------------------------------

What about the quark mass? Because quarks cannot be isolated, the quark masses cannot be measured directly. We give here the so-called constituent mass, which allows the mass of a ground-state hadron (i.e., the lowest-energy baryon or meson made of these quarks) to be estimated as the sum of the constituent masses of its quarks.
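Here is a minimal sketch of that estimate (hypothetical names), using the constituent masses from the quark table and comparing the sums with the rounded measured masses of three spin-1/2 ground-state baryons.

```python
# Constituent masses in GeV, copied from the quark table above.
CONSTITUENT_MASS_GEV = {"u": 0.31, "d": 0.31, "s": 0.5, "c": 1.6, "b": 4.6}


def constituent_estimate(quarks):
    """Rough ground-state mass of a hadron with the given quark content."""
    return sum(CONSTITUENT_MASS_GEV[q] for q in quarks)


# Rounded measured masses (GeV) of three spin-1/2 ground-state baryons.
examples = {
    "proton (uud)": ("uud", 0.938),
    "Lambda (uds)": ("uds", 1.116),
    "Lambda_c (udc)": ("udc", 2.286),
}

for name, (quarks, measured) in examples.items():
    print(f"{name}: estimate {constituent_estimate(quarks):.2f} GeV, "
          f"measured {measured} GeV")
```

The sums land within a few percent of the measured values, which is about as well as such a simple additive rule can be expected to do.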

Why did I restrict the use of constituent mass to estimating the ground states of the hadrons? Remember the particle zoo, the proliferation of particles that were observed in the 1950s and 1960s? Most of those particles are resonances (i.e., excited states) of quark composites. For example, the lowest-energy delta resonance is a set of four particles that are excited states of the nucleon, having charges -1 (ddd), 0 (ddu), +1 (duu) and +2 (uuu). In the delta, all three quarks have their spins pointing in the same direction, giving a net spin of 3/2, unlike the proton and neutron, which have net spin 1/2. The delta has a mass of about 1230 MeV, considerably larger than the proton mass of 938 MeV. There are even higher-energy delta resonances in which the quarks have orbital angular momentum as well as their intrinsic spin.
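The statement that three spin-1/2 quarks can combine to net spin 3/2 or 1/2 can be checked by counting states. This sketch (hypothetical names) uses the standard counting rule: list the total spin projection S_z of every up/down configuration; the number of multiplets with total spin S is then the number of configurations with S_z = S minus the number with S_z = S + 1.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

HALF = Fraction(1, 2)


def sz_counts(n_quarks):
    """How many of the 2**n up/down spin configurations give each total S_z."""
    return Counter(sum(spins) for spins in product((HALF, -HALF), repeat=n_quarks))


def spin_multiplets(n_quarks):
    """Map total spin S -> number of independent multiplets with that S."""
    counts = sz_counts(n_quarks)
    multiplets = {}
    for s in sorted((m for m in counts if m >= 0), reverse=True):
        number = counts[s] - counts[s + 1]  # a Counter returns 0 for missing keys
        if number:
            multiplets[s] = number
    return multiplets


print(spin_multiplets(3))  # three quarks (baryons)
print(spin_multiplets(2))  # quark + antiquark (mesons)
```

For three quarks the 8 spin states split into one spin-3/2 quartet (4 states, all spins aligned in the top state, as in the delta) and two spin-1/2 doublets (2 + 2 states), from which the proton and neutron are built.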

We are talking here about spectroscopy, the quantized energy levels of composite systems: systems composed of two or more components that are bound together. There are four distinct spectroscopies in matter, starting at lower energies and proceeding to higher ones.

At each level, we go to smaller length scales and higher energies. The following table summarizes this semi-quantitatively. The range of transition energies is typical of the spectroscopy, and the photon wavelength corresponds to those energies.

Spectroscopy | Particles of | Transition      | Photon
Type         | Composite    | Energies        | Wavelength (cm)
=======================================================================
Molecular    | atoms        | 0.01 - 0.1 eV   | 10^-2 - 10^-3
Atomic       | electrons    | 0.001 - 10 keV  | 10^-4 - 10^-8
Nuclear      | nucleons     | 0.1 - 10 MeV    | 10^-9 - 10^-11
Hadron       | quarks       | 10 - 1000 MeV   | 10^-11 - 10^-13
-----------------------------------------------------------------------

Note: there exist transition energies in atomic and molecular spectroscopy that are very much lower than those given above. These are due to the weak coupling of the magnetic moments of the electrons and of the nuclei to the magnetic field produced by moving electrons (electrons with orbital angular momentum), and are called fine structure and hyperfine structure, respectively.

It is also useful to apply static electric and magnetic fields to atomic systems. For example, nuclear magnetic resonance is due to transitions between the magnetic energy levels (spin states) of the atomic nucleus, which are split by the application of an external magnetic field.
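The wavelength column of the spectroscopy table follows from the photon relation lambda = hc/E. The sketch below (hypothetical names) converts each row's energy range, rewritten in eV, into wavelengths in cm. For comparison it also converts a typical NMR transition: protons in a 1 tesla field precess at about 42.58 MHz, so the transition energy is hf, far below anything in the table.

```python
HC_EV_CM = 1.23984e-4       # Planck's constant times c, in eV*cm
H_EV_S = 4.135668e-15       # Planck's constant in eV*s
C_CM_PER_S = 2.99792458e10  # speed of light in cm/s

# Transition-energy ranges from the spectroscopy table, converted to eV.
RANGES_EV = {
    "Molecular": (0.01, 0.1),
    "Atomic": (1.0, 1.0e4),
    "Nuclear": (1.0e5, 1.0e7),
    "Hadron": (1.0e7, 1.0e9),
}


def photon_wavelength_cm(energy_ev):
    """Wavelength lambda = hc / E of a photon carrying the given energy."""
    return HC_EV_CM / energy_ev


for name, (low, high) in RANGES_EV.items():
    print(f"{name:10s} {photon_wavelength_cm(low):.1e} - "
          f"{photon_wavelength_cm(high):.1e} cm")

# NMR for comparison: protons in a 1 tesla field precess at about 42.58 MHz.
nmr_frequency_hz = 42.58e6
print(f"NMR at 1 T: {H_EV_S * nmr_frequency_hz:.1e} eV, "
      f"wavelength {C_CM_PER_S / nmr_frequency_hz:.0f} cm")
```

Each computed range agrees with the table to within the factor of about 1.24 that the table rounds away, while the NMR photon is a radio wave several meters long.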